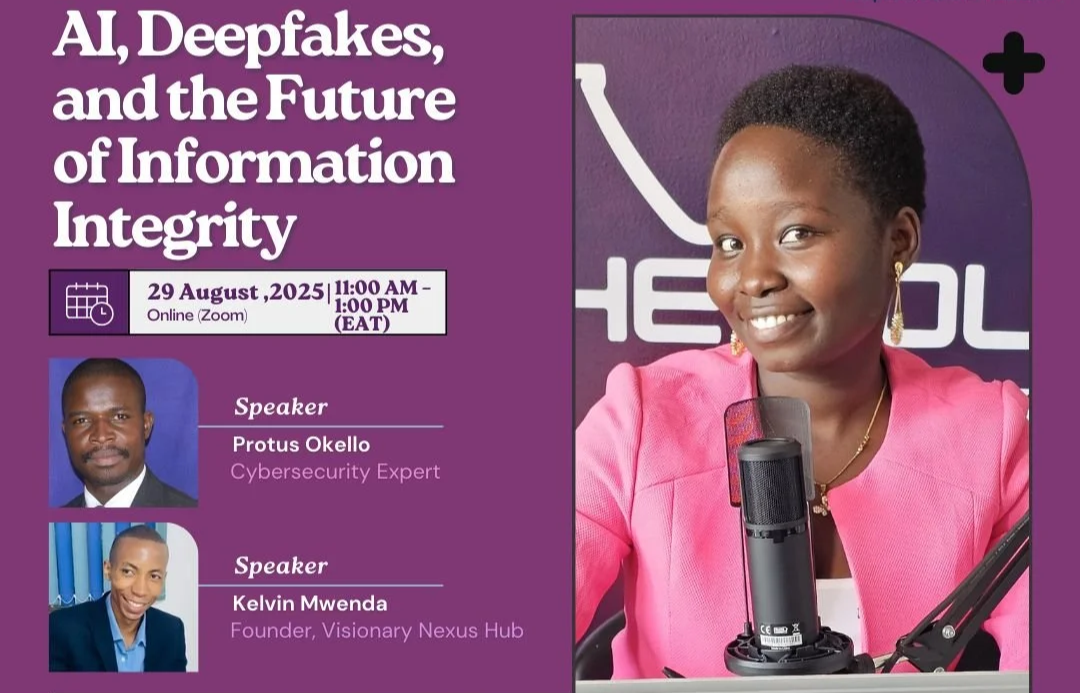

In an age where technology is advancing at an unprecedented pace, our ability to distinguish between truth and fiction is being tested like never before. On Friday, August 29, 2025, The Youth Café hosted a timely and critical webinar titled “AI, Deepfakes, and the Future of Information Integrity” from 11 a.m. to 1 p.m. The session, which was moderated by Shirley Jemeli, brought together a diverse group of participants, including tech professionals, fact-checkers, journalists, electoral bodies, civic educators, and youth leaders, along with speakers Kelvin Mwenda from Visionary Nexus Hub and Protus D. Okello, to address this critical challenge.

The webinar highlighted a defining paradox of the digital age: while Artificial Intelligence (AI) can be a force for good, it is also being weaponized to create highly convincing, yet completely fabricated, content. The speakers emphasized that securing the future of information integrity will require a global, multi-faceted approach and collaboration among technology developers, lawmakers, media organizations, and the public.

Speaker One: Protus D. Okello on the Deepfake Threat Landscape

Protus D. Okello opened the session by laying the foundation for understanding the threat, defining deepfakes as AI-generated video, audio, or images that manipulate a person's likeness to make them appear to say or do things they never did. He detailed how the power of AI is harnessed to generate synthetic misinformation.

The Mechanics of Manipulation: Mr. Okello explained that these sophisticated forgeries are created using techniques like deep learning, specifically a form of AI called Generative Adversarial Networks (GANs). These models learn a person's expressions, voice, and likeness from massive datasets, then superimpose them onto new video, creating a realistic, fabricated scene. The tools required for this are often web-based and readily available, making the barrier to entry alarmingly low.

A Threat to the Core of Democracy: He underscored that this technology poses a significant threat to information integrity through several vectors:

Widespread Misinformation: Deepfakes manipulate political narratives and spread disinformation on a massive scale, with the initial shock of a convincing fake having devastating real-world consequences.

Erosion of Public Trust (The Liar's Dividend): The proliferation of deepfakes fosters a "liar's dividend," where a public figure can deny a genuine video, claiming it is a fabrication, which leads to a general climate of doubt where the public becomes skeptical of all digital media.

Attacks on Human Perception: Mr. Okello’s presentation highlighted the core principle of the threat: “While hackers attack computers, deepfakes attack human perception.” This notion emphasizes the profound danger these synthetic creations pose to the very foundation of how we process information.

Global Case Studies in Action: Mr. Okello shared multiple global case studies to illustrate the immediate impact of AI-driven misinformation:

The Slovakian Election (2023): Just two days before the election, a deepfake audio clip went viral, purportedly capturing a leading pro-European candidate discussing a plan to rig the election. Because the clip surfaced during the legally mandated "silence period," countering it was difficult, and its rapid spread may have influenced the outcome.

The U.S. Presidential Primaries (2024): A major incident involved an AI-generated voice clone of President Joe Biden used in a robocall urging voters in the New Hampshire primary to stay home and not vote. This was a clear case of voter suppression in which AI was used to borrow the authority of a trusted voice.

The Dual Edge of AI: Mr. Okello concluded his segment by addressing the double-edged nature of AI. While it is a powerful tool for harm, he noted that it is also one of our best defenses: "Machine learning models can analyze vast amounts of data in real-time, identifying patterns of fake news spread, detecting deepfakes, and flagging suspicious content for human review faster than any manual process could." He described these technological countermeasures as one part of the overall solution.

Speaker Two: Kelvin Mwenda on the African Reality and Voter Vulnerability

Kelvin Mwenda, the AI for Good Strategist from Visionary Nexus Hub, grounded the discussion in the African context, providing crucial statistics and specific case studies from the continent. He emphasized that the threat is not theoretical, stating plainly that “Deepfakes function as 'malware for the mind,' corrupting our information ecosystem.”

The Chilling Statistics: Mr. Mwenda presented alarming statistics that showcase the urgency of the problem:

85% of people fear deepfakes could influence elections.

9 in 10 democracies face organized disinformation campaigns, many using AI.

Most strikingly, only 37% of citizens feel confident they can spot a fake video.

The African Casebook: The presentation shifted to African nations, which have been subjected to increasingly sophisticated synthetic misinformation campaigns:

Kenya: The local impact is significant, evidenced by a 2025 deepfake showing President Ruto announcing his resignation, which garnered over 1.4 million views. Other incidents included manipulated imagery of the President during protests ("2024 Coffin Deepfake") and fabricated headlines about foreign political figures' influence over Kenyan politics that garnered millions of views ("Fake Trump Headlines").

Nigeria: In 2023, AI-generated audio describing an election-rigging plot circulated online.

South Africa: The 2024 elections saw deepfakes falsely endorsing local political parties.

Uganda: Manipulated images of opposition leader Bobi Wine were used to spread false narratives.

Why Voters Are Vulnerable:

Trust Networks: People tend to trust content forwarded by friends and family.

Speed vs. Verification: The virality of social media outpaces fact-checkers' ability to respond.

Digital Literacy Gaps: Many users lack the necessary digital hygiene and verification skills.

The Three-Pronged Strategy for Resilience

The solution, as synthesized from both speakers, requires a holistic, multi-faceted approach involving everyone. This strategy focuses on three crucial areas:

1. Technological Countermeasures: AI is now being leveraged to fight AI. Advanced detection software looks for subtle inconsistencies that are not visible to the human eye, such as unnatural blinking, strange lighting, or audio glitches. Beyond detection, solutions like digital watermarking and blockchain technology are being explored to verify the origin and authenticity of media content at the point of creation, establishing authentication standards.

2. Media Literacy Education (The Voter Survival Toolkit): This is the most crucial of the human-centric solutions, because equipping the public with critical thinking skills is a powerful defense in its own right. Mr. Mwenda presented a "Voter Survival Toolkit," emphasizing the key principle: "Pause & Verify before sharing any content." Practical steps to spot deepfakes include looking for:

Unnatural Blinking: Irregular or missing eye blinks in video content.

Lip-Sync Issues: Misalignment between lip movements and spoken words.

Background Anomalies: Shimmering or distortion in video backgrounds.

Audio Quality: Robotic or unnaturally flat voice tones.

Mr. Okello added the importance of questioning the source (Is it from an official outlet?) and checking for emotional alarm bells, as misinformation often uses sensational content to bypass critical thinking.

3. Policy and Governance: Governments and social media platforms must collaborate to create and update laws that address the malicious use of deepfakes. The goal is to encourage a zero-trust approach to media, which promotes constant verification and healthy skepticism to protect the information environment. The speakers recommended tools such as Deepware Scanner, TinEye, and Google Lens, along with reputable fact-checking organizations such as AfricaCheck and PolitiFact.
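For readers curious how verifying authenticity "at the point of creation" might work in practice, here is a minimal sketch in Python. It was not presented at the webinar and is only an illustration under stated assumptions: the key `PUBLISHER_KEY` and the functions `sign_media` and `verify_media` are hypothetical names, and the shared-secret scheme shown (HMAC) is a simplified stand-in for the public-key signatures a real provenance standard would likely use.

```python
import hashlib
import hmac

# Hypothetical secret held by the publisher (assumption for illustration).
# A real system would more likely use public-key signatures so that
# verifiers never need to hold the secret.
PUBLISHER_KEY = b"demo-key-not-for-production"


def sign_media(media_bytes: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Return a hex tag that binds the key to these exact media bytes."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """Check that the media still matches the tag issued at creation."""
    expected = sign_media(media_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Stand-in bytes for a media file; any change to them invalidates the tag.
original = b"official statement video bytes"
tag = sign_media(original)
tampered = original + b" (edited)"

print(verify_media(original, tag))   # True
print(verify_media(tampered, tag))   # False
```

The point of the sketch is that even a tiny alteration to the file changes the result, so a tag issued when the media was created can later show whether the content has been modified.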

Key Takeaways and Conclusion

The participants' feedback highlighted several crucial points, reinforcing the webinar's main message. The most valuable takeaway for many was the realization that technology can be leveraged to counter misinformation and polarization, not just during elections, but as an ongoing effort. Attendees also found a deeper understanding of AI and deepfakes to be a vital piece of the discussion.

Perhaps most significantly, the feedback emphasized that the power of these new technologies, while capable of shaping narratives, is ultimately in the hands of the people. With the right information, individuals can put these tools to work for citizens, especially by sharing political truths rather than lies, thereby combating the spread of misinformation on social media.

Ultimately, the most crucial defense is a vigilant and well-informed public. The future of information integrity is uncertain, but by raising awareness, using available tools, and adopting a mindset of "pause and verify," we can all play a role in protecting our democracies.